An Introduction to Post-Quantum Cryptography

A viewpoint on cryptography in a future, post-quantum IoT security environment

It’s an exciting time to be working on quantum technologies; finally, quantum computers are nearly here. For some time, proponents of the technology have claimed with some certainty that the first commercially available quantum computers will be made within the next twenty years, but successes such as those by teams at IBM, D-Wave, and most recently Google indicate that this time the belief can be put down to more than just optimism and sales pitches. Although it is too soon to understand the full implications of a device truly capable of harnessing quantum effects, there is little doubt that we will see advances in fields such as data science, where the power of a quantum computer to find patterns in large swathes of data can be properly exploited.

Unfortunately, not everyone gets to view the arrival of quantum computers with nothing but excitement. Cryptographers, the practitioners responsible for protecting people’s data and privacy on the internet and beyond, are preparing to see decades of well-tuned techniques for hiding data become invalidated by the first wave of sophisticated quantum machines. Fortunately, preparing is the operative word here. An algorithm developed by Peter Shor in the mid-1990s demonstrated that quantum computers will be capable of solving the famous ‘integer factorization’ problem, the security backbone of the RSA cryptosystem, dramatically faster than our modern computers can. Even worse, the main building block of Shor’s algorithm, the Quantum Fourier Transform, can be adapted to break most other commonly implemented cryptography, such as Diffie-Hellman key exchange and elliptic curve digital signatures. So, the question is: what have cryptographers been doing over the last twenty years to prepare for this eventuality? In short, they’ve developed the subject of Post-Quantum Cryptography (PQC), the study of cryptography that can be run on classical computers while remaining resistant to quantum attacks.

Cryptography 101

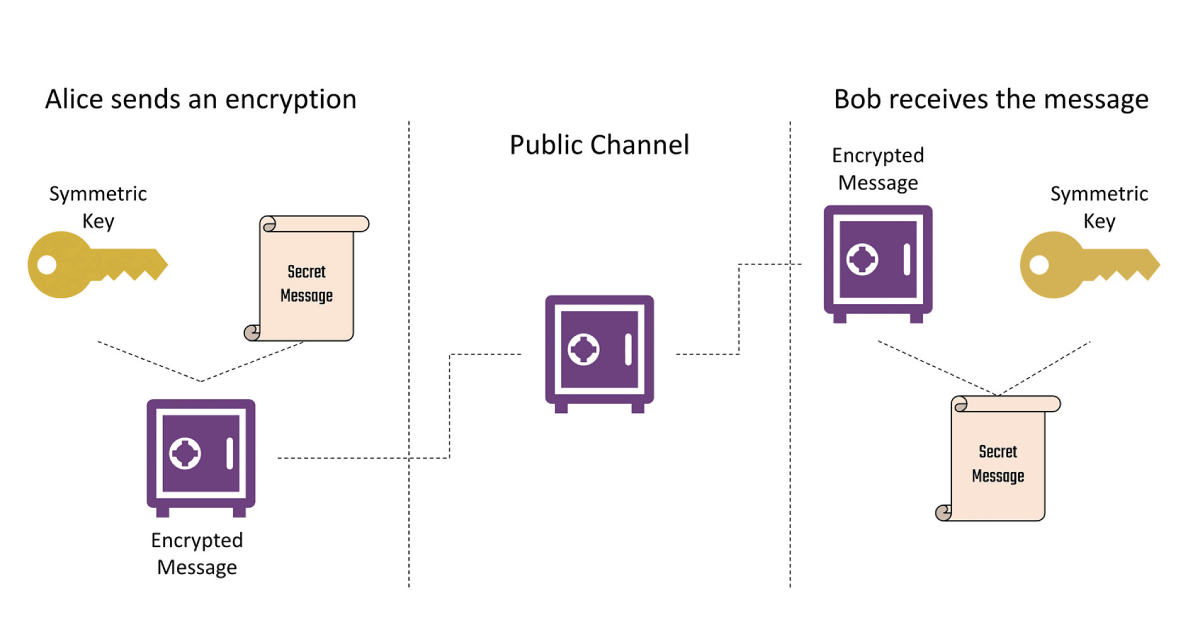

Before we get to post-quantum cryptography, we should first understand some basics of cryptography and what it means for a quantum computer to break it. Traditionally, cryptographers have sought to solve variants of the following problem: Alice wants to send a message – “I hid the treasure in the library” – to her friend Bob, but she has no private channel through which to send this message. Furthermore, she is worried that Eve may try to intercept her secret message and steal the treasure. So what can Alice do? This is where encryption comes in. Let’s say last time Alice and Bob were together, they knew that they might want to send messages to each other after Alice had hidden the treasure. So, they agreed on a key as a way to do this in secret. Now, when Alice wants to send a message to Bob she uses this key to encrypt her message (imagine putting the message in a locked box), and once it arrives with Bob he merely decrypts using the same key (unlocking the box). If Eve manages to intercept their communication, she is unable to unlock the box since she does not have the key. Because Alice and Bob share the same key, the one that they agreed upon before, we call this symmetric cryptography.

Mathematically, encrypting a message means using the key to garble Alice’s message into a string of nonsense – “vrbayebcjaelaiyurbjeaby!” – that can only be unwrapped into Alice’s message by using the key she shares with Bob. Eve, successfully intercepting the message but lacking the key, will find herself unable to make any sense of what Alice has sent.
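To make the locked-box picture concrete, here is a minimal sketch of symmetric encryption using a one-time XOR pad. This is a toy chosen for brevity, not a scheme discussed in this post and not how AES works; the function name `xor_bytes` and the variable names are assumptions made for illustration.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of key."""
    if len(key) < len(data):
        raise ValueError("key must be at least as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


message = b"I hid the treasure in the library"

# The key Alice and Bob agreed on when they last met (toy: one random byte per message byte).
shared_key = secrets.token_bytes(len(message))

ciphertext = xor_bytes(message, shared_key)    # Alice locks the box
recovered = xor_bytes(ciphertext, shared_key)  # Bob unlocks it with the same key

print(ciphertext.hex())    # what Eve sees: random-looking bytes
print(recovered.decode())  # what Bob reads
```

Applying the same operation with the same key both encrypts and decrypts, which is exactly the symmetry the name refers to.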

Of course, there is an obvious problem with this set up. What if Alice and Bob need to have a secret conversation without being able to meet and agree on a key in advance? Here, we come across the more involved techniques of Public Key Cryptography. To set up an exchange, Bob makes himself a pair of keys: a public key and a private key. The public key he sends out in to the world, and can be thought of as an encryption key for sending messages to Bob. When Alice wants to send a message to Bob, she looks up his public key and uses it to encrypt her message. Since Eve only has access to the public encryption key, if she manages to intercept Alice’s message she will still only see nonsense. Once the message is received by Bob he now uses his private key, essentially a decryption key, to unwrap and read Alice’s communication.

In practice, parties are unlikely to have been able to agree on a key in advance – think about how often you have to input your bank details to make a purchase on a website you’ve never visited before. Consequently, secure techniques for Public Key Cryptography are of the utmost importance for internet security. Unfortunately, building public key schemes turns out to be quite hard, and they usually end up running pretty slowly when you want to send a lot of information. Luckily, this has an easy fix. Alice and Bob begin by using a public key scheme, or a similar technique known as a key exchange protocol, to secretly communicate one small message, which is merely a shared key. They then use this shared key with a well-established symmetric scheme, typically a scheme called AES, to continue their secret communications without the overhead required in public key techniques. This is how common internet standards such as TLS suggest secure communication be done in practice.
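As a rough illustration of the first half of this hybrid approach, the sketch below runs a toy Diffie-Hellman style key exchange and hashes the result into a 32-byte key that a symmetric scheme could then use. The modulus, the base and the function names are assumptions picked for readability; the parameters are far too small and unvetted for real use, and real protocols such as TLS add authentication on top.

```python
import hashlib
import secrets

P = 2**127 - 1  # a Mersenne prime; a toy modulus, nowhere near a safe real-world choice
G = 3           # illustrative base, an assumption of this sketch


def keypair():
    """Return (private exponent, public value) for one party."""
    private = secrets.randbelow(P - 3) + 2  # random exponent in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_key(my_private: int, their_public: int) -> bytes:
    """Combine my private exponent with the other party's public value."""
    secret = pow(their_public, my_private, P)
    # Hash the shared number down to a fixed-size symmetric key.
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# Only the public values travel over the network, where Eve can see them.
alice_key = shared_key(alice_private, bob_public)
bob_key = shared_key(bob_private, alice_public)

print(alice_key == bob_key)
```

Both sides arrive at the same key without ever sending it, and from that point on the conversation can switch to a fast symmetric scheme.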

Robust symmetric cryptography in the form of AES has been around since the 90s, and when correctly implemented is viewed as extremely secure. Hence, the potential weak point in this set up lies in the public key stage. How secure is a public key scheme? Famously, it is possible to base the security of public key cryptography, meaning the difficulty of breaking a scheme, on computational mathematical problems. The most well-known example of this is of course the RSA scheme. In RSA, Bob’s public key is some large integer n, and his secret key is some extra information about n, namely that it can be factorized as the product of two prime numbers p and q, so that pq = n. To send a message to Bob, Alice can encrypt it using n in such a manner that one needs p and q to decrypt the message. This is easy for Bob, since he knows these prime numbers, but an intercepting Eve must recover them by factorizing the public key n. So, Eve’s task is precisely the ‘integer factorization’ problem targeted by Shor’s algorithm, a problem that can’t be solved efficiently on today’s computers provided that p and q are big enough. Other common public key and key exchange techniques derive their security from different hard mathematical problems, for example the discrete logarithm problem, in the same sense – the scheme is shown to be as hard to break as it is hard to solve the problem.
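The RSA description above can be sketched with deliberately tiny numbers. This is "textbook" RSA with no padding, so it is an illustration only; the primes 61 and 53, the exponent 17 and the function names are assumptions chosen to keep the arithmetic readable.

```python
# Bob's secret: two primes. Real keys use primes hundreds of digits long.
p, q = 61, 53
n = p * q                # public modulus, 3233
e = 17                   # public exponent
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)      # private exponent, 2753 (modular inverse, Python 3.8+)


def encrypt(m: int, n: int, e: int) -> int:
    """Alice encrypts using only Bob's public values."""
    return pow(m, e, n)


def decrypt(c: int, n: int, d: int) -> int:
    """Bob decrypts using the exponent derived from p and q."""
    return pow(c, d, n)


def factor_by_trial_division(n: int):
    """Eve's classical attack: feasible here only because n is tiny."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")


message = 65
ciphertext = encrypt(message, n, e)
print(decrypt(ciphertext, n, d))    # Bob recovers 65
print(factor_by_trial_division(n))  # Eve recovers the primes of this toy modulus
```

Trial division takes about as many steps as the square root of n, which is why the same attack is hopeless against a modulus thousands of bits long on a classical machine.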

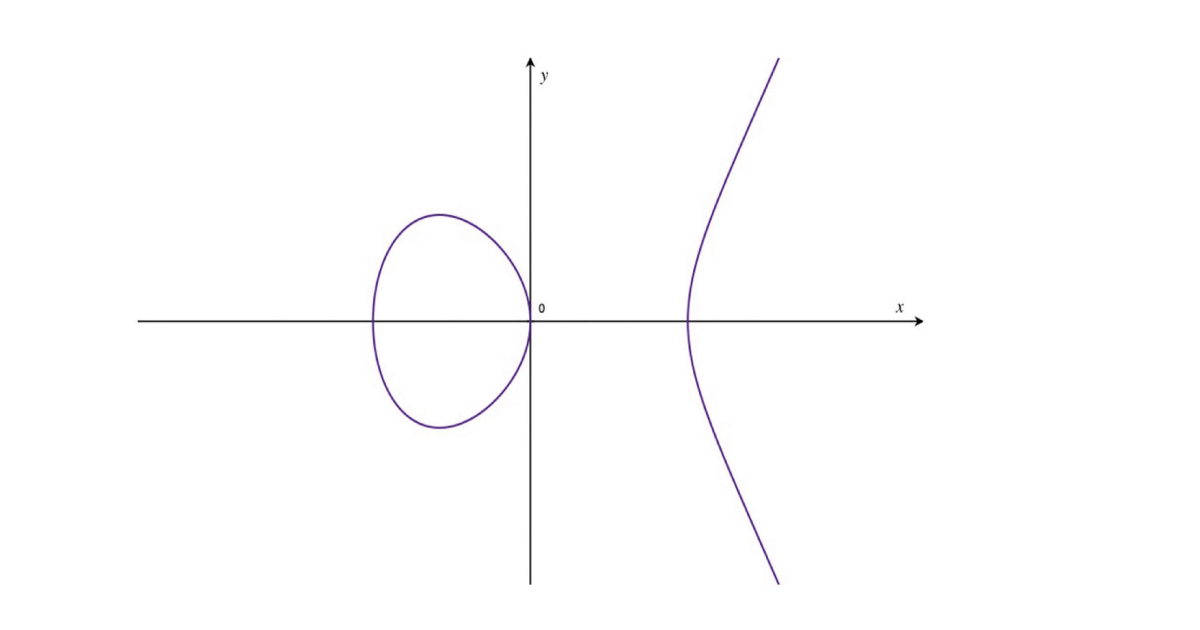

One thing we have not touched on yet is the problem of authentication: when Bob receives a message from Alice, how can he be sure that it was really sent by Alice, and that it was really what Alice meant to say? After all, if Eve is capable of intercepting messages, might she also be capable of tampering with them before they get to Bob? Especially in the age of internet communication, it can be hard to trust that every message came from who it claims to be from. To solve this, we rely on the conveniently named topic of digital signatures, which work much like their real world namesake. Alice uses a private key, her power to sign messages, to attach a signature to her message to Bob that tells Bob that it was Alice who sent this message. Additionally, the signature summarises its intended contents in such a way that if the content is tampered with then the signature would appear incorrect. Upon receiving this message, Bob can look at Alice’s corresponding public key, which tells him what Alice’s signature looks like, to check that Eve has not interfered with the intended message and that it originated from Alice. Similarly to encryption, cryptographic techniques for this also rely on complicated mathematics, where the difficulty of forging Alice’s signature corresponds to the hardness of solving a computational problem. For example, to forge a signature in a commonly used signature scheme known as ECDSA, Eve must be able to solve the discrete logarithm problem over something known as an elliptic curve.
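A hash-then-sign flow can be sketched as follows. Note the swap: this uses a toy RSA-style signature rather than ECDSA, because it fits in a few lines. The two primes, the exponent and the function names are assumptions for illustration; real schemes use secret random primes and a padding scheme.

```python
import hashlib

# Toy parameters built from two well-known Mersenne primes (an assumption of this sketch).
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                          # part of Alice's public key
E = 65537                          # public verification exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # Alice's private signing exponent (Python 3.8+)


def digest(message: bytes) -> int:
    """Summarise the message as a number below N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Alice signs the digest with her private exponent."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Bob checks the signature using only Alice's public values."""
    return pow(signature, E, N) == digest(message)


message = b"I hid the treasure in the library"
signature = sign(message)

print(verify(message, signature))                               # genuine message
print(verify(b"I hid the treasure in the kitchen", signature))  # tampered message
```

Because the signature is tied to a digest of the content, changing even one word of the message makes the check fail unless Eve can also produce a matching signature.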

Quantum Computing and Hard Problems

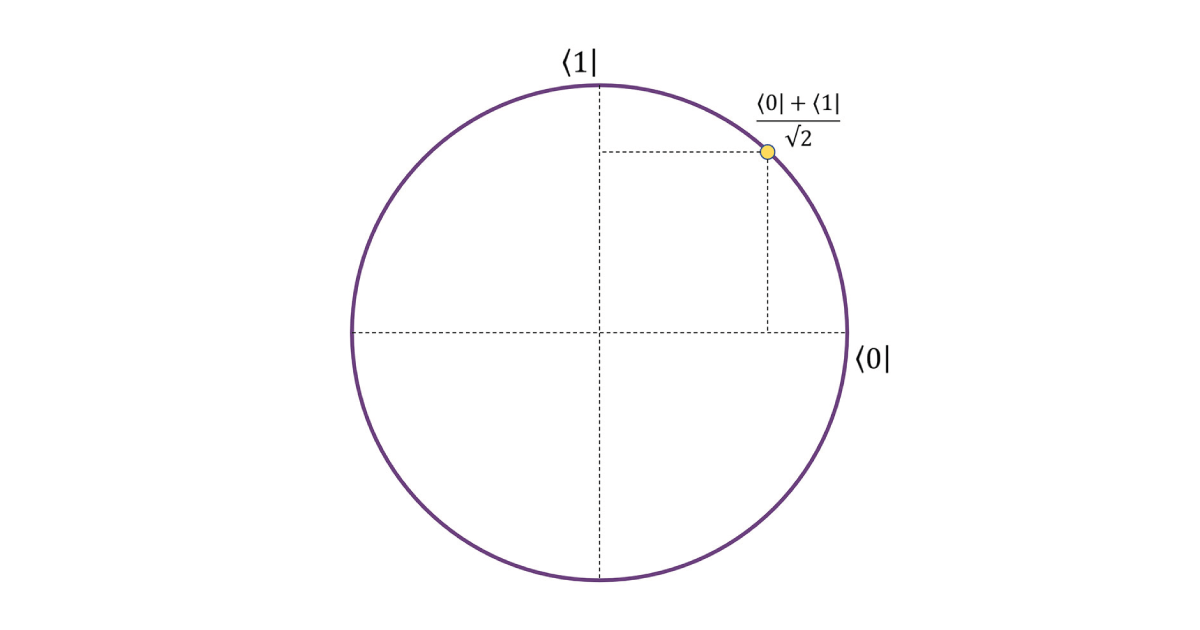

Although a complete explanation of the mechanisms of a quantum computer would fill up a blog post by itself, a brief summary will help us understand the ideas behind making quantum resistant cryptography. Remember, to build post-quantum public key schemes we are looking for mathematical problems that even a quantum computer cannot crack. Quantum computers are a lot like classical computers, except that they replace gates on classical bits, objects that are each in either a 0 or a 1 state, with operations on a quantum variant called a qubit. In a simplified picture, a qubit represents a point on the unit circle, a circle of radius 1, and a quantum gate represents a mapping from one point on the unit circle to another. The tricky part is that when you try to read a qubit it doesn’t tell you where it lies on the unit circle. Instead, it collapses randomly into a 0 or 1 state, with the probability of each outcome depending on its position on the circle.
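The measurement rule can be simulated classically for a single qubit. The sketch below assumes the usual real-amplitude convention: a qubit at angle theta on the unit circle is the point (cos theta, sin theta), and it reads as 0 with probability cos²(theta) and as 1 with probability sin²(theta). The function names and the default number of shots are illustrative assumptions.

```python
import math
import random


def measure(theta: float, rng: random.Random) -> int:
    """Collapse a qubit at angle theta on the unit circle to a classical 0 or 1."""
    p_zero = math.cos(theta) ** 2
    return 0 if rng.random() < p_zero else 1


def estimate_p_one(theta: float, shots: int = 10_000, seed: int = 0) -> float:
    """Prepare and measure the same qubit many times; return the fraction of 1s."""
    rng = random.Random(seed)
    return sum(measure(theta, rng) for _ in range(shots)) / shots


for label, theta in [("0", 0.0), ("pi/4", math.pi / 4), ("pi/2", math.pi / 2)]:
    print(f"theta = {label}: fraction of 1s = {estimate_p_one(theta):.3f}")
```

A single reading never reveals theta itself; only the statistics over many repeated preparations hint at where the qubit sat on the circle.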

Due to their more complicated nature, qubits are able to represent and store much more information than a regular bit. This does not mean they’re better at all tasks, and researchers are starting to develop an understanding of what tasks they are more suited to. As a helpful piece of intuition, one can imagine a quantum computer as being able to ‘parallelize’ tasks very cheaply. That is, if a task can be broken into several smaller subtasks that can be done at the same time, then a quantum device is adept at doing each of these smaller tasks at once. Relative to a classical device that must do each task sequentially, the quantum computer will save itself a lot of time. Consider prime factoring, for example: a quantum computer could just split the possible factors of n into groups, and then in parallel check whether any of the numbers in each group divide n, rather than having to start from 1 and check each number sequentially. Contrast this with tasks that require constantly updating the same object – these are tasks where quantum computers may not perform much better than classical computers. In fact, the security of common symmetric schemes like AES relies on ‘mixing’ objects together, so that to break the encryption of a message you have to manually perform a bunch of unmixing steps. This idea explains why the focus of PQC is primarily on updating public key cryptography – quantum computers are not dramatically better at unmixing symmetric encryptions than classical computers are, so the majority of symmetric constructions need only slightly alter their parameters to still be considered secure.
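To put a rough number on "slightly alter their parameters": the best known generic quantum attack on a symmetric key is a search (Grover's algorithm, which this post does not otherwise cover) that needs on the order of the square root of the number of possible keys. The sketch below encodes only that rule of thumb; the function name is an assumption, and real-world cost estimates are more nuanced.

```python
def grover_effective_bits(key_bits: int) -> float:
    """Rough security level, in bits, against a Grover-style quantum key search."""
    return key_bits / 2


for key_bits in (128, 256):
    print(
        f"AES-{key_bits}: about 2^{key_bits} classical guesses, "
        f"about 2^{grover_effective_bits(key_bits):.0f} quantum search steps"
    )
```

Under this rule of thumb, doubling the key length restores the original security margin, which is a far smaller change than replacing the scheme outright.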

Candidate Quantumly Hard Problems

Armed with this knowledge about what makes a problem hard for a quantum computer, we can take a quick look at the leading candidates for computational problems in PQC. There is some blue-sky research out there, but the bulk of the suggested PQC schemes lie in one of four major categories: lattice, code-based, multivariate, and isogeny-based cryptography.

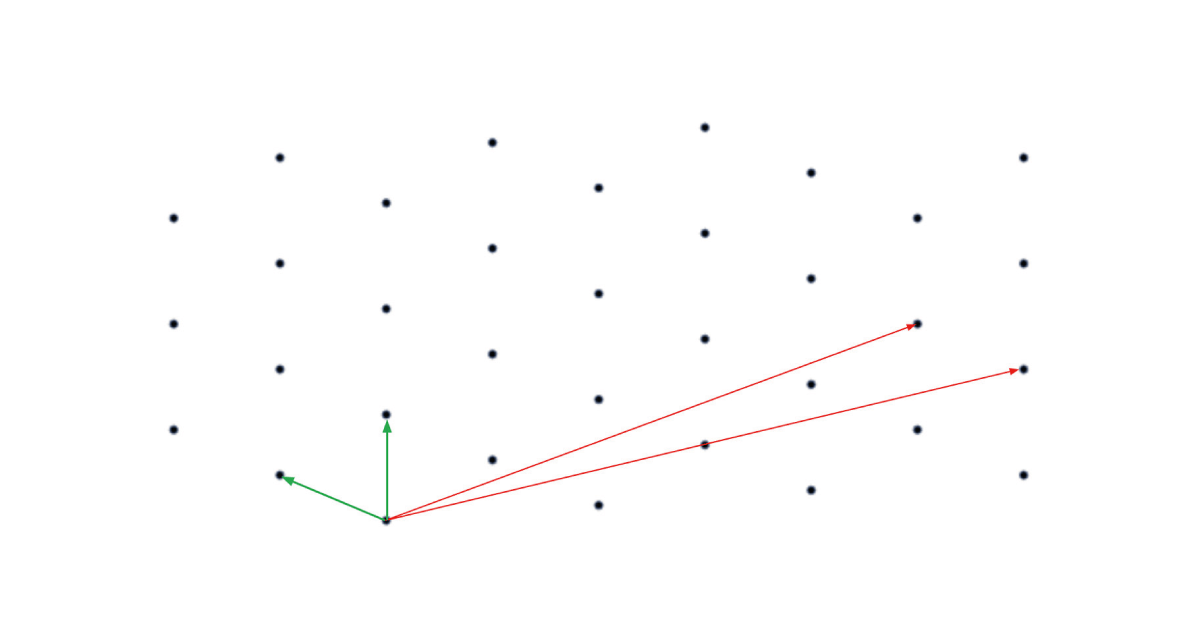

First up is the most popular category, lattice cryptography. A lattice can be understood as a regular grid of points in space, where the points on the lattice are chosen systematically from an object called its basis, which describes the lattice by explaining how you move between lattice points.

Lattices are classical mathematical objects, and computationally hard problems on them have been studied since long before even the RSA cryptosystem existed, which lends some confidence to the notion that these problems should remain hard even in the face of quantum attacks. The archetypal hard lattice problem is the shortest vector problem: upon receiving the basis for a lattice, the solver is asked to find the nonzero lattice point closest to the origin. Although easy for small lattices such as the one depicted above, when the lattice is an object in hundreds of dimensions this problem becomes very taxing. The backbone of building cryptography from lattice problems comes from an interesting observation about defining a lattice from a basis. A basis used to construct a lattice is not unique, and it is possible to have a ‘good’ and a ‘bad’ basis of the same lattice. For example, in the diagram above all lattice points can be reached by starting at 0 then walking along combinations of either the red or the green lines, called vectors. If you’ve got the green basis, it is easy to quickly understand the shape of the lattice, since your vectors are short and nearly at 90 degrees to each other. Once you understand the lattice well, you can just read off the shortest vector. Conversely, using the red lines you have to do a lot of wiggling to find points close to 0, and even once you’ve found one how would you verify that there’s nothing shorter? Lattice-based cryptosystems harness this idea to build cryptography – at a high level, the idea is to use the bad basis for a lattice as a public key and a good basis for the same lattice as a private key. The bad basis describes the lattice well enough to hide the message as a hard problem on the lattice. Then, only the intended recipient can solve this problem easily, because that requires access to a good basis.

Lattice cryptography tends to be the most flexible category of PQC, since there is a lot of design space to work with in terms of how one chooses lattices and hides points. This flexibility has led to lattices being applied successfully to the problems of both encryption and digital signatures.
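The good-basis versus bad-basis idea can be seen even in two dimensions. In the sketch below, the two bases are made-up examples (they are not the ones in the diagram): the bad basis is built from the good one using integer combinations with determinant 1, so both describe the same lattice. A naive search over small coefficients finds the shortest vector immediately from the good basis but not from the bad one.

```python
from itertools import product

GOOD_BASIS = [(3, 1), (-1, 3)]     # short and at 90 degrees to each other
BAD_BASIS = [(12, 14), (19, 23)]   # 5*g1 + 3*g2 and 8*g1 + 5*g2: same lattice, long and skewed


def shortest_found(basis, bound):
    """Try all coefficient pairs in [-bound, bound]; return (squared length, vector)."""
    best = None
    for a, b in product(range(-bound, bound + 1), repeat=2):
        if a == 0 and b == 0:
            continue  # the zero vector does not count
        x = a * basis[0][0] + b * basis[1][0]
        y = a * basis[0][1] + b * basis[1][1]
        norm_sq = x * x + y * y
        if best is None or norm_sq < best[0]:
            best = (norm_sq, (x, y))
    return best


print(shortest_found(GOOD_BASIS, 1))  # squared length 10 with coefficients of size 1
print(shortest_found(BAD_BASIS, 1))   # only reaches squared length 130
print(shortest_found(BAD_BASIS, 8))   # needs coefficients up to 8 to reach 10
```

In two dimensions widening the search is cheap, but the number of coefficient combinations grows exponentially with the dimension, which is where the hardness in hundreds of dimensions comes from.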

Code-based cryptography is the oldest of the four main families, dating back to the development of the McEliece cryptosystem in 1978. It is based on the field of coding theory, which is the study of how to successfully transfer information over an unreliable channel. To send information in this way, you use something called an error-correcting code, where a message is encoded in a manner that tolerates a certain amount of mistaken data arising from the unreliable channel, with the recipient still being able to recover the intended message. The private key for a cryptosystem based on this is just a well-chosen error-correcting code, and the corresponding public key is a recipe for encoding a message to be decoded. Encryption is simple – the sender performs the usual encoding process for the message and then simulates an unreliable channel by manually inputting some errors. As long as there are enough errors, an adversary without access to the description of the error-correcting code cannot recover the message, whereas the intended recipient is able to decode it by using their error-correcting code. The nice part about these schemes is that McEliece has been around a long time, and consequently there is a great degree of confidence in its security and in that of schemes resembling it. On the other hand, McEliece has been around a long time and no one uses it – it has very large public keys, which can make it a little slow, and it generally compares poorly with RSA in a pre-quantum era.
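The error-correcting ingredient can be illustrated with the small Hamming(7,4) code, which protects 4 data bits with 3 parity bits and corrects any single flipped bit. This is not the McEliece cryptosystem (which uses much larger codes and hides their structure); it only shows the encode, add errors, decode cycle that such schemes build on. The matrix layout and function names are assumptions of this sketch.

```python
# Each row says which of the 3 parity checks a given data bit takes part in.
P = [(1, 1, 0), (1, 0, 1), (0, 1, 1), (1, 1, 1)]
# Columns of the parity-check matrix: one per codeword position (4 data bits, then 3 parity bits).
H_COLUMNS = P + [(1, 0, 0), (0, 1, 0), (0, 0, 1)]


def encode(data):
    """Append 3 parity bits to 4 data bits."""
    parity = [sum(d * row[j] for d, row in zip(data, P)) % 2 for j in range(3)]
    return list(data) + parity


def syndrome(word):
    """All zeros for a valid codeword; otherwise it matches the column of the flipped bit."""
    return tuple(sum(b * col[j] for b, col in zip(word, H_COLUMNS)) % 2 for j in range(3))


def decode(word):
    """Correct up to one flipped bit, then return the 4 data bits."""
    word = list(word)
    s = syndrome(word)
    if s != (0, 0, 0):
        word[H_COLUMNS.index(s)] ^= 1
    return word[:4]


data = [1, 0, 1, 1]
codeword = encode(data)

noisy = list(codeword)
noisy[2] ^= 1  # the sender (or the channel) flips one bit

print(codeword, noisy, decode(noisy))
```

Anyone who knows the code's structure can undo the error with one table lookup; the security of code-based schemes rests on decoding being hard when that structure is hidden.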

Multivariate cryptography is based on the problem of solving systems of multivariate polynomial equations, typically multivariate quadratic ones. That is, systems of equations in several unknowns in which no term has degree higher than two.
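One standard way to write such a system is shown below. The notation is an assumption of this sketch: there are m equations in n unknowns, the coefficients a, b, c and the targets y are public and live in a small finite field, and the task is to find values for the unknowns x that satisfy every equation at once.

```latex
\begin{aligned}
\sum_{1 \le i \le j \le n} a^{(1)}_{ij}\, x_i x_j + \sum_{i=1}^{n} b^{(1)}_{i}\, x_i + c^{(1)} &= y_1 \\
\sum_{1 \le i \le j \le n} a^{(2)}_{ij}\, x_i x_j + \sum_{i=1}^{n} b^{(2)}_{i}\, x_i + c^{(2)} &= y_2 \\
&\;\;\vdots \\
\sum_{1 \le i \le j \le n} a^{(m)}_{ij}\, x_i x_j + \sum_{i=1}^{n} b^{(m)}_{i}\, x_i + c^{(m)} &= y_m
\end{aligned}
```

For instance, with three unknowns and arithmetic modulo 2, a tiny system of this shape would be x_1 x_2 + x_3 = 1, x_1 x_3 + x_2 = 0, x_2 x_3 + x_1 + x_2 = 1.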

Finally, isogeny-based cryptography builds on elliptic curves. Although many existing forms of elliptic curve cryptography, such as ECDSA, are vulnerable to quantum computers, this is a consequence of the specific nature of the algorithms and not a weakness of elliptic curves themselves. To base cryptography on isogenies, which are structure-preserving maps between elliptic curves, we use the following observation: given two elliptic curves E1 and E2, it is hard to find an isogeny φ that sends E1 to E2. So, a public key could be a pair of elliptic curves, with the private key a corresponding isogeny φ: E1 -> E2. Now, the possessor of the private key can use the isogeny φ to compute operations involving E1 and E2 that are intractable for someone without access to φ, and as usual the public pair E1 and E2 can be used to set up these problems. Although the field of isogeny-based cryptography is still in its relative infancy, there is substantial excitement about it within the cryptographic community, especially following successful experiments on using isogeny-based schemes in internet protocols. Additionally, an impressively efficient signature scheme called SQISign, based on a novel problem within isogeny-based cryptography, has been given a Best Paper award at this year’s Asiacrypt conference.

Towards Standardization: The NIST Process

Given all these competing ideas, it can be hard to see where the future of cryptography lies. One place to turn to for guidance is the Post-Quantum Standardization process run by the National Institute of Standards and Technology (NIST) of the United States. NIST have previously run processes to standardize symmetric cryptography and hash functions, which resulted in the widely used AES and SHA3 primitives respectively. Although not an official global standard, it is likely that any algorithm supported by NIST will receive levels of uptake similar to those of AES and SHA3, so examining this process should provide a state-of-the-art understanding of the likely future of PQC. NIST have been keen to make clear that this should be considered a process and not a competition with a ‘winner’, so they may choose to standardize or suggest multiple algorithms for different use cases if there is no clear best technique. The two main features NIST are looking for are security and efficiency: if a protocol isn’t secure then there’s no point in using it to protect data, and if it isn’t efficient then it would slow down internet communications. In general, PQC tends to perform slower than its pre-quantum counterpart, so an algorithm can be considered efficient if there is little to no drop in performance when using it in place of an existing pre-quantum scheme.

The process was initiated by NIST in 2016, in the form of an open call to the cryptographic community asking for proposals for post-quantum key-encapsulation mechanisms (essentially asymmetric encryption schemes intended to send a symmetric key) and signature schemes, with parameter settings suitable for a variety of practical use cases. This open nature is a major feature of the NIST process; there’s a public Google group containing detailed discussions on specific candidate schemes as well as more general features of the process. 69 candidates were initially accepted into the first round, although a handful quickly withdrew after mistakes were discovered, and in January 2019 NIST ended the first round of the process, announcing the 26 schemes that had made it into round two. In July 2020, after nearly 18 months of community focus on these schemes, NIST announced its final round candidates: 4 key-encapsulation mechanisms and 3 signature schemes, with the intention of choosing at least one of each type at the end of the process. Although these schemes have all been thoroughly vetted, they each come with different strengths and weaknesses that are useful to understand.

The 4 remaining key-encapsulation schemes are: NTRU, a classic lattice-based scheme using techniques dating back to the 90s; CRYSTALS-Kyber, a more modern lattice scheme based on a problem called Module LWE; SABER, a yet more modern lattice scheme based on a ‘rounded’ problem called Module LWR; and Classic McEliece, a modernization of the code-based scheme from the late 70s. The three lattice schemes can be seen as choosing different trade-offs between security and efficiency: on the one hand, SABER performs better than CRYSTALS-Kyber, which in turn performs better than NTRU. Conversely, NTRU has the most well-established security properties, whereas SABER relies on the Module LWR problem which is still not very well understood, with CRYSTALS-Kyber’s reliance on Module LWE once again placing it in the middle. Classic McEliece has worse performance than its lattice-based competitors, but could be standardized as a fail-safe in case it turns out that the power of quantum attacks on lattice schemes was vastly underestimated; diversity in cryptographic assumptions can be a powerful tool in the face of the unknown. NIST has indicated that it is likely to initially select one of NTRU or CRYSTALS-Kyber to standardize, but a more thorough security analysis of SABER or of quantum attacks on lattices could alter their course.

Turning our attention to signature schemes, we once again see that lattices are the likely future of PQC. Two of the three remaining candidates use lattices: CRYSTALS-Dilithium, a signature scheme developed by the same team as CRYSTALS-Kyber using similar techniques, and Falcon, an NTRU-like signature scheme packaged with some novel technical contributions. The other remaining scheme, Rainbow, is a multivariate scheme with worse overall performance than its lattice counterparts. Much like with Classic McEliece, NIST believe there is benefit in including non-lattice candidates, and the relative youth of Rainbow as a scheme means it is possible that it could be improved in the near future, or be made to excel in particular use cases. Again, NIST have suggested they are likely to standardize one of the two lattice schemes: Falcon is arguably a little more efficient, but comes with substantial technical complications in implementation that may result in it being regarded as unnecessarily complicated compared to the more elegant design of CRYSTALS-Dilithium, which still performs very well.

NIST also chose 8 schemes as “alternates”, schemes that they believe should receive further study since NIST are open to the possibility of their standardization in the future. The alternates chosen have a mix of desirable characteristics and noteworthy drawbacks: for example, they might be highly secure but quite inefficient, require more streamlining, or lack the exposure to determined attackers that is typically needed to establish confidence in cryptographic security. Of the five key encapsulation alternates, four can best be understood by comparison to finalists: FrodoKEM can be seen as a more conservative version of CRYSTALS-Kyber that runs slower but is more secure, and NTRU Prime is an NTRU-like scheme that works around a conjectured weakness in other lattice techniques at a small cost in efficiency. BIKE and HQC are two modern code-based schemes that perform more efficiently than Classic McEliece but that introduce new security assumptions and thus need substantially more scrutiny. The fifth scheme, SIKE, is the only remaining isogeny-based cryptosystem in the process. NIST have deemed SIKE to be lacking sufficient maturity to be standardized, but its uniqueness means it is a candidate likely to undergo serious research efforts in the future. For the signature alternates, only one bears any similarity to the finalists: a scheme known as GeMSS, which competes closely with Rainbow but is expected to be less desirable in the majority of applications. The other two alternates, SPHINCS+ and Picnic, are based on hash functions. Both run pretty slowly but have extremely strong security guarantees, so may be standardized for use with highly sensitive data or in case of quantum ‘Armageddon’, where it turns out that quantum computers can break cryptography which uses anything more complicated than simple primitives like hash functions.

Outlook

Quantum computers are coming, and anyone interested in adequately securing their data must start looking to the future now. For the curious observer, keeping an eye on the NIST process will keep them up to date. From now until when NIST chooses their standards, which they expect to take around 12-18 months, the 7 finalists will be subject to enormous levels of examination, and over time it will likely become clear which candidates are expected to make the cut. For the more proactive out there, there’s never been a better time to consider what algorithms best support your use cases. All the schemes remaining in the NIST process have unique selling points, but initial standards are likely to focus on the schemes that are the most well-balanced. For example, if you work with data that is particularly sensitive, it might be worth getting involved in discussions about the more conservative schemes. Conversely, if you need to secure devices that might not have access to much computing power, such as IoT devices, you may need to consider which schemes can be operated in particularly lightweight settings; perhaps a good subject for another blog post!
